How a Human Factors Systems Framework Can Provide a Different Lens

The most recent view in human factors as a discipline is that humans are one element of a sociotechnical system, that is, a system incorporating both humans and technology (Salmon et al., 2011; Wilson, 2014).

When designing tasks, equipment, work environments or organisational structures, or when reviewing incidents and accidents, we should always consider humans in that context. In review and design, we should capture the complexity of sociotechnical systems. This means that we should consider not just individual actions, but also the influence of conditions, demands and pressures on those actions. When things go wrong, we should redesign the context of work, not just retrain an individual worker.

Many system analyses are represented in complex diagrams (see for example Salmon, Cornelissen and Trotter, 2012), which often overwhelm those new to systems thinking.

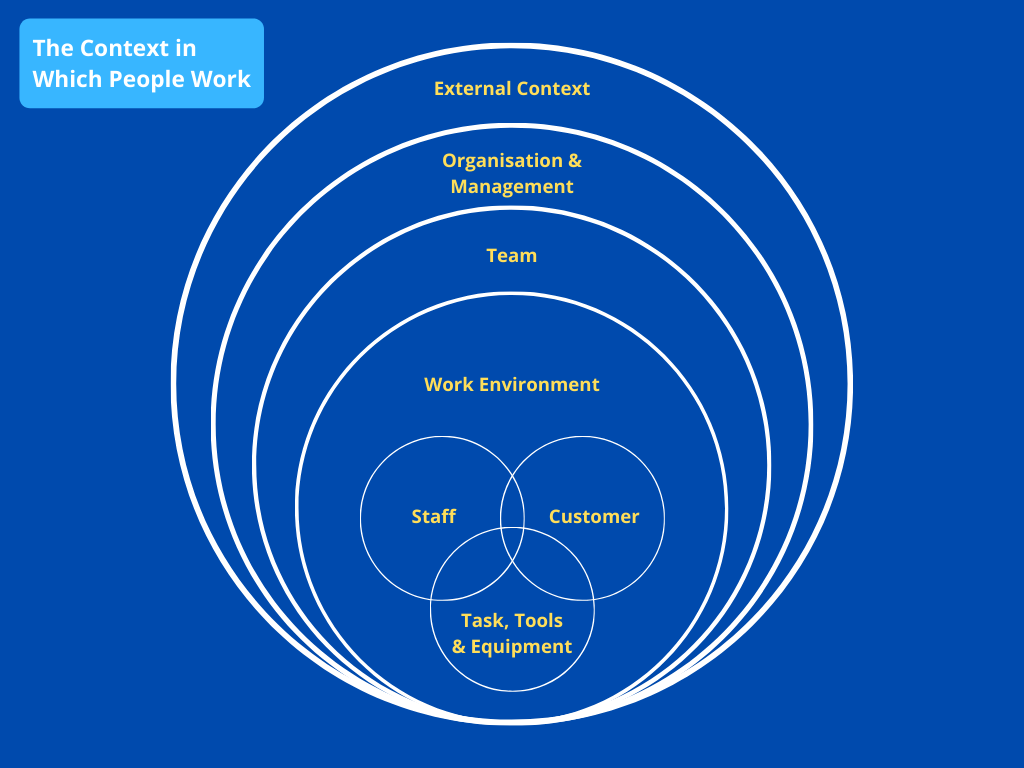

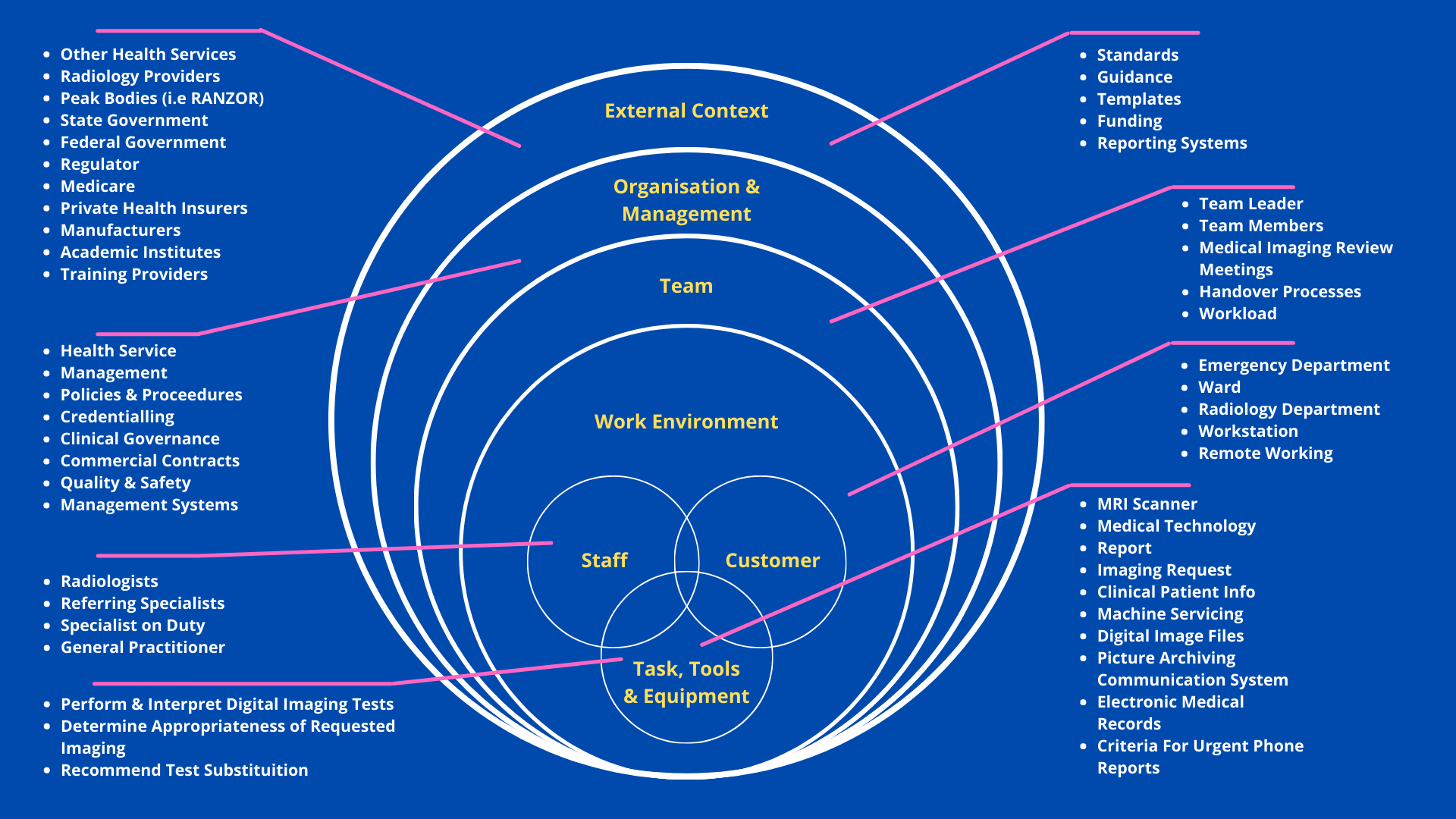

To provide a more accessible analogy as a first introduction to systems analysis, we can consider the context within which people work as layers of an onion (see Figure 1). At the core are staff and customers, together with the tools and equipment they interact with for the tasks they are performing. These interactions happen not only within a physical work environment but also within a team environment and the wider organisation and management structure.

The organisation does not exist within a vacuum but sits within a certain demographic area and interacts with external agencies including other operators, organisations, government agencies and regulators – the external context.

This Insights paper is the first of two papers that discuss human factors and systems thinking. It will focus on how a human factors systems framework adds value to incident and accident investigation. A second paper will focus on the benefits a systems framework can offer in design projects.

A System Lens in Incident Investigation

Incidents and accidents are the result of multiple contributing factors across a sociotechnical system (or layers of the onion, in line with the analogy in the introduction). These factors can be found in people and equipment operating close to the accident scenario, and also in decisions and actions further removed from the accident in both time and place, within organisations and external agencies such as regulators and government departments. Unfortunately, a systems lens is not always applied in investigations, resulting in missed opportunities for systemic improvements.

Countering the Urge

Whenever an incident or accident happens, the public, management, government and media often look for a quick answer. The urge to make sense of what happened and to find a person or something to blame is a human tendency. It is natural for the public and for anyone affected to seek answers.

Those responsible for investigating an incident or accident will need to counter the demand for a quick answer. The goal of a safety investigation is to understand what happened and, more importantly, why it happened. It also needs to identify lessons learned and recommend safety actions to prevent it from happening again (or minimise the consequences if a similar event were to happen again).

Human Factors or ‘The Human Factor’

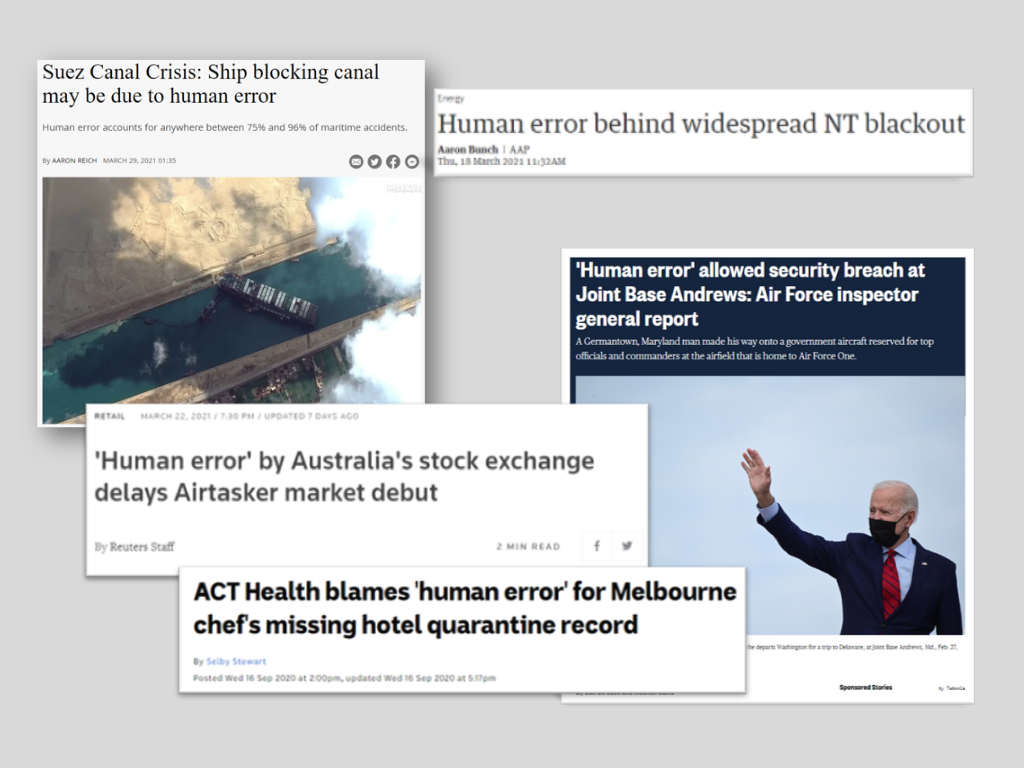

Unfortunately, the colloquial terms ‘the human factor’ and ‘human error’ are often used to describe the cause of an incident or accident (see Figure 2 for some examples). Such statements immediately focus attention on a person involved in the task, rather than on the context of work and the system they were operating in. This misses the opportunity to apply systems thinking, shine a spotlight on the system as a whole and find ways to improve it holistically.

This view of humans and human error remains entrenched. This is despite the view that ‘errors are a symptom of faulty systems, processes and conditions’, which arose in the 1990s and was captured in the influential work of James Reason (Reason, 1990; Reason, 1997) and Jens Rasmussen (Rasmussen, 1997), and later by colleagues such as Erik Hollnagel, Sidney Dekker and David Woods (Hollnagel, 2004; Dekker, 2006; Woods et al., 2010).

Further, most accident investigation authorities in the United Kingdom, United States, Canada and Australia use a systems-based accident analysis method (see for example ATSB, 2007).

System Lens

To develop recommendations that are effective and have an impact on the system, investigators have to apply a structured process to systematically analyse factors across the system, or ‘peel back the layers of the onion’, rather than find a quick answer and apply a quick fix. They have to look beyond ‘human error’ and understand the context within which people work and why people’s decisions and actions made sense to them at the time (in line with a just culture approach).

There are a few starting points to analyse an issue or event with a systems lens. For example:

Who and what is part of the system?

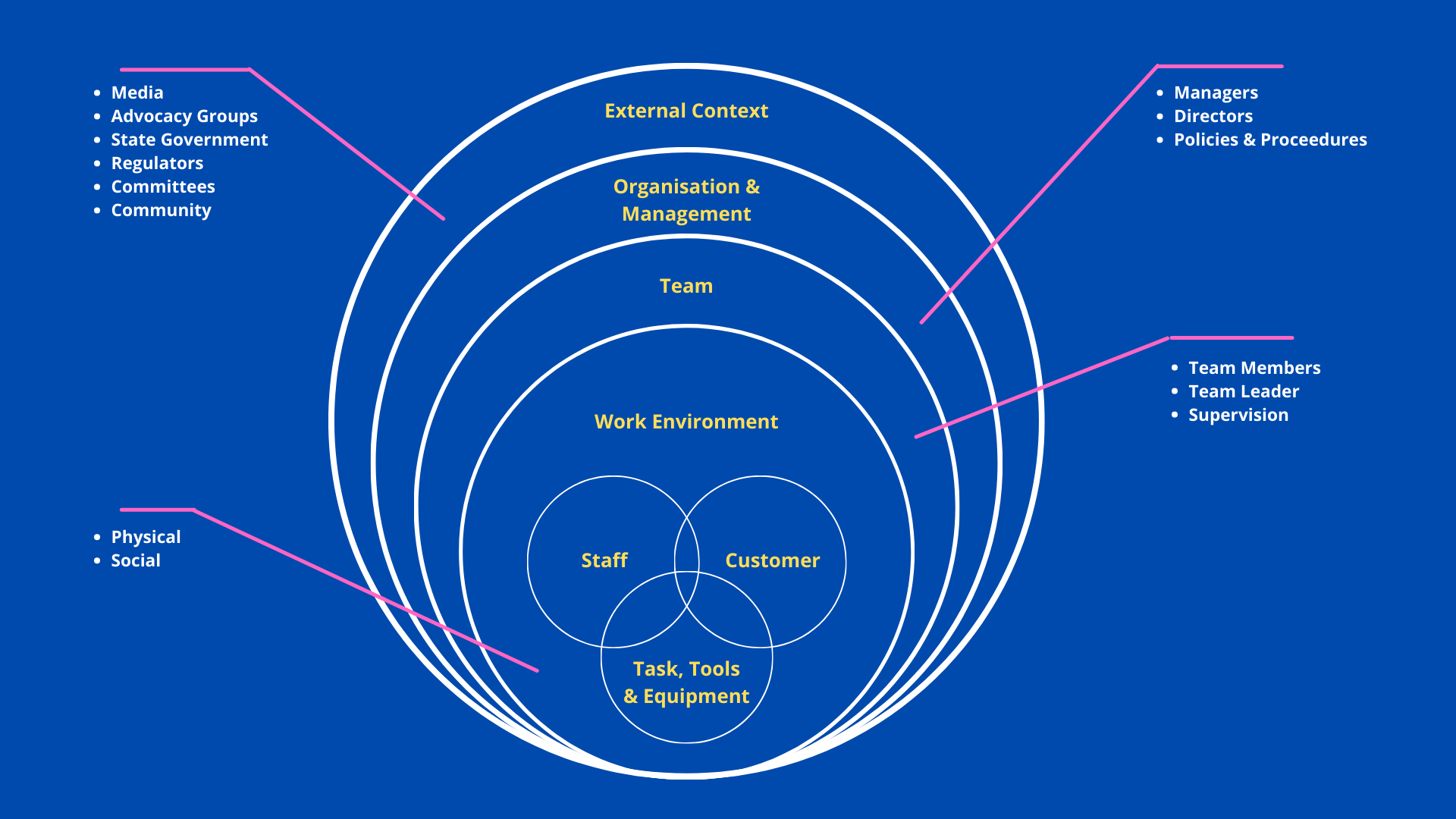

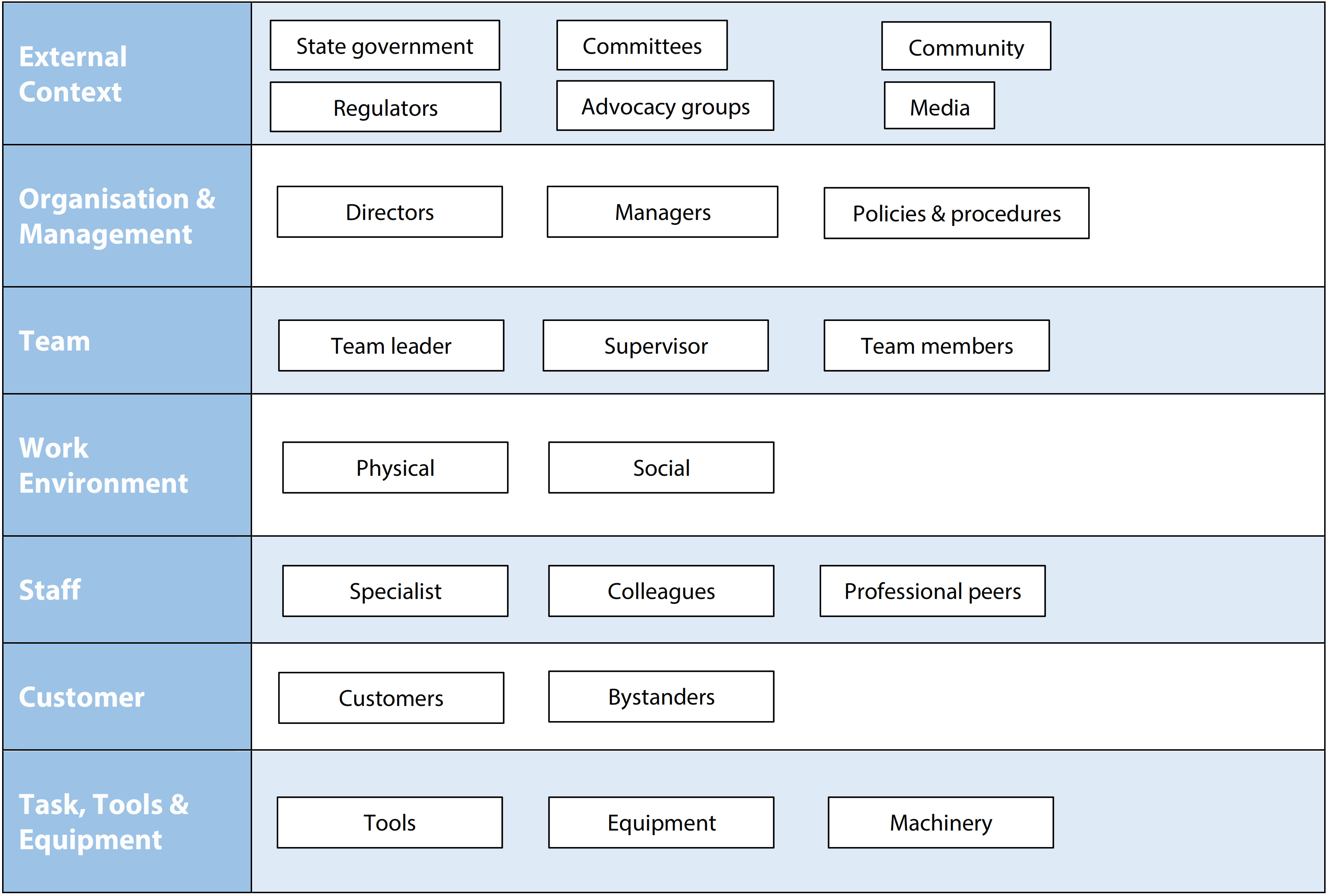

One starting point is to map the ‘who’ (staff, customers, public) and ‘what’ (equipment, environment, guidelines, procedures) parts of the system onto the ‘layers of the onion’ (see Figure 3 for a high-level, domain-agnostic example) or onto swim lanes representing the layers (similar to an Actormap (Rasmussen, 1997)).

If the ‘who’ and ‘what’ across the system are not identified systematically and proactively, the first opportunity to keep a systems lens in a review may already be missed.

What factors may have played a role in the event or contributed to the severity of the outcome?

Factors that contributed to the incident or accident, identified through interviewing and data collection, can be mapped onto the ‘onion’ model or swim lanes representing the system. This not only helps visualise the data and start the analysis but also helps to spot any gaps in the analysis, e.g., when layers remain empty.

Contributing factors frameworks exist for most domains. They can be a useful way to prompt consideration of potential factors within each layer of the system and to think beyond what is currently known or identified. Contributing factors frameworks can also be used to analyse the factors that have been identified.
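The mapping step described above can be sketched in a few lines of code. This is a minimal illustration only, assuming the layer names from the onion analogy in the introduction; the names `LAYERS`, `map_factors_to_layers` and `empty_layers`, and the sample factors, are hypothetical and not part of any published framework. The sketch groups factors by layer and lists the layers that remain empty, which may point to gaps in the analysis.

```python
# Layers of the 'onion', from the core outwards (names are an assumption
# based on the analogy in the introduction).
LAYERS = [
    "people, tools and tasks",
    "physical work environment",
    "team environment",
    "organisation and management",
    "external context",
]


def map_factors_to_layers(factors):
    """Group (layer, description) pairs by layer, keeping the layer order."""
    mapped = {layer: [] for layer in LAYERS}
    for layer, description in factors:
        if layer not in mapped:
            raise ValueError(f"Unknown layer: {layer!r}")
        mapped[layer].append(description)
    return mapped


def empty_layers(mapped):
    """Return the layers with no factors recorded against them."""
    return [layer for layer, items in mapped.items() if not items]


# Hypothetical factors collected through interviews and data collection.
factors = [
    ("people, tools and tasks", "operator was fatigued"),
    ("team environment", "no colleague available to double check"),
    ("organisation and management", "understaffing and high workload"),
]

mapped = map_factors_to_layers(factors)
gaps = empty_layers(mapped)

for layer in LAYERS:
    print(f"{layer}: {mapped[layer]}")
print("Layers with no factors yet:", gaps)
```

An empty layer does not prove that nothing in that layer contributed; it is a prompt to ask whether the data collection looked there at all.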

An Example Scenario

Imagine this fictitious example – a health service is reviewing a missed diagnosis and considers the radiologist to be at fault because they did not detect the anomaly. In addition, the radiologist did not have their report double checked, which is against the procedure. The investigation recommends the radiologist undergoes retraining on procedures and that the procedure is re-written to more clearly state that all reports must be double checked.

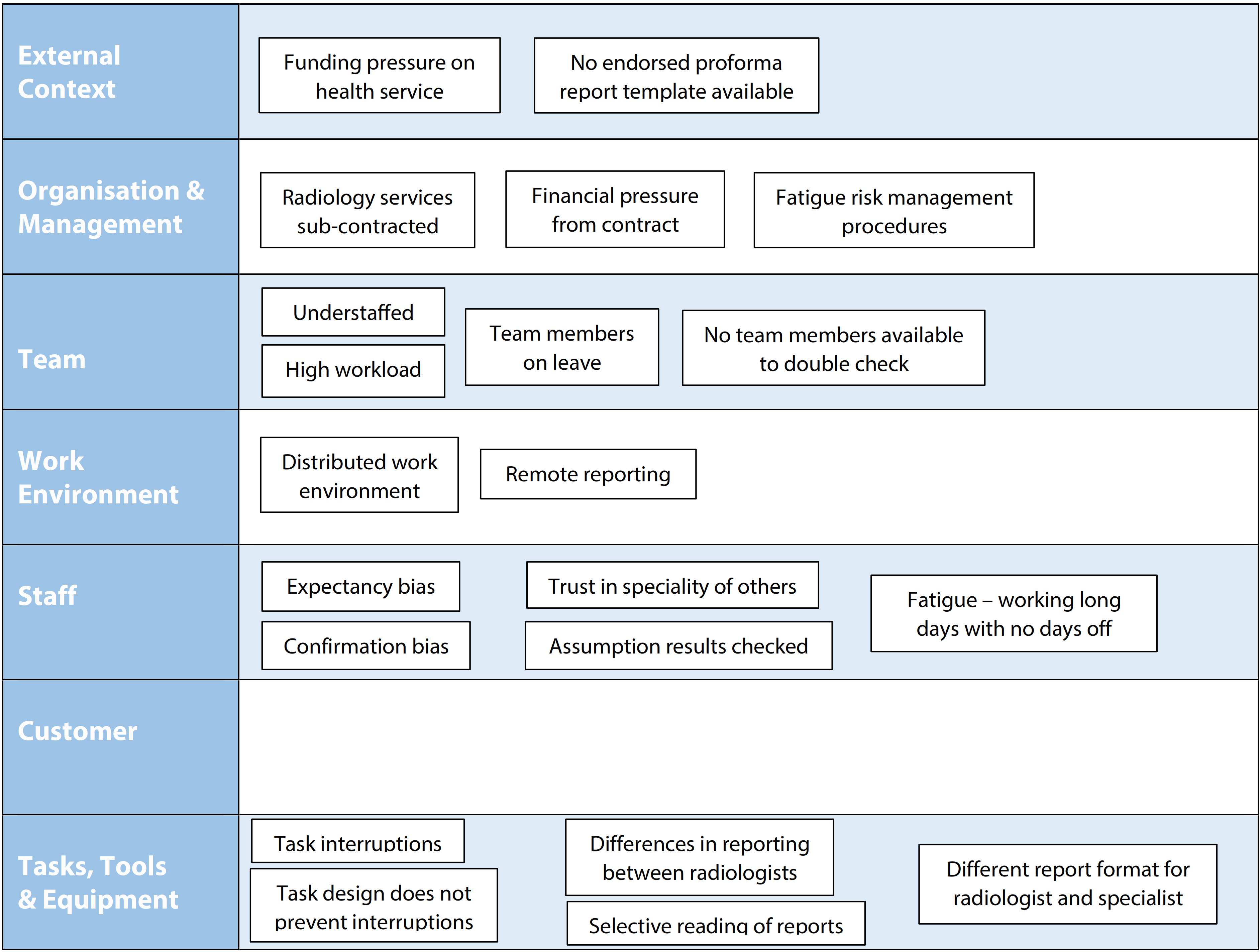

When the health service conducted a systemic mapping exercise of factors that played a role in the incident, the picture that emerged was more complex and involved many more actors.

The systems map (see Figure 4) helped the team focus on all the different parts of the system, not just the radiologist’s actions.

It turned out that everyone involved in the case expected the results to be negative. The report confirmed this expectation, so it raised no alarm bells. Everyone trusted others in their area of specialty and assumed that results had been checked.

The radiology service was sub-contracted, and the contract resulted in financial pressures. The lab in which the images were reviewed was remote from the health service, resulting in a distributed work environment with no face-to-face communication.

The radiology lab was understaffed and there was a high workload. Radiologists were frequently interrupted by different tasks and requests, and the tasks were not designed to protect radiologists from such interruptions. Because of leave arrangements, no team members were available for double checking.

The radiologist was fatigued, having worked many long days in a row without any days off.

The report was read by multiple people and no one identified the misdiagnosis at the time. Every person reading the report from the radiologist focused on the points that were most important to them.

Upon further investigation, it was found that different radiologists reported differently and that there was no profession-wide standardised proforma, making it more likely that an error would slip in and harder for anyone to pick up inconsistencies.

It was also found that specialists received the report in a different format from the one the radiologist used, making any conversations more difficult. Through mapping the contributing factors (see Figure 5), the health service identified themes that needed to be resolved, such as forms and reporting, contracts, staffing and impact on operations, task design and confirmation bias, rather than fixing the radiologist or the procedure.
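The grouping of factors into themes can be illustrated with a short sketch. The factors below paraphrase the fictitious scenario, and the theme names come from the text; the assignment of each factor to a theme, and the names `SCENARIO_FACTORS` and `group_by_theme`, are assumptions made for illustration and do not reproduce Figure 5.

```python
from collections import defaultdict

# (theme, factor) pairs. The pairing of factor to theme is an illustrative
# assumption, not taken from the figures in this paper.
SCENARIO_FACTORS = [
    ("confirmation bias", "everyone expected a negative result"),
    ("confirmation bias", "readers focused on the points most important to them"),
    ("contracts", "sub-contracted radiology service under financial pressure"),
    ("staffing and impact on operations", "lab understaffed with high workload"),
    ("staffing and impact on operations", "leave arrangements left no one to double check"),
    ("staffing and impact on operations", "radiologist fatigued after many long days"),
    ("task design", "frequent interruptions during reporting"),
    ("forms and reporting", "no profession-wide standardised proforma"),
    ("forms and reporting", "specialists received the report in a different format"),
]


def group_by_theme(pairs):
    """Collect factors under their theme."""
    themes = defaultdict(list)
    for theme, factor in pairs:
        themes[theme].append(factor)
    return dict(themes)


themes = group_by_theme(SCENARIO_FACTORS)

# List themes with the most factors first, as a prompt for discussion.
for theme, items in sorted(themes.items(), key=lambda kv: -len(kv[1])):
    print(f"{theme}: {len(items)} factor(s)")
```

Counting factors per theme is only a conversation starter; a theme with a single factor may still warrant the most important recommendation.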

Recommendations From Investigations

It may be tempting to quickly find and fix a broken component, whether by rectifying an obvious technical failure or by dismissing a person.

However, if the underlying system issues remain unaddressed and only people, policies or technology directly involved in the accident are looked at, the safety issue may occur again for another person, on another day.

The impact of any change should also be considered across the system. Its intended and unintended effects on other parts of the system should be taken into account, and the change should be trialled and evaluated.

Considering What Goes Right Every Day

In more recent years, there have been discussions that there should be less of a focus on the 1% of times when things go wrong and more of a focus on the 99% of times that things go right (coined Safety-II; Hollnagel, 2014). This shifts the focus to everyday work and acknowledges the reality for those who do the work, day in, day out.

Regardless of the discussion about whether this is or is not a different approach to safety, it provides an additional valuable lens for any incident or accident review.

Through observations of work activities and curious inquiry with those who do the same or similar work, investigators can gain an improved understanding of the context of work. This includes the usual conditions, demands and pressures that people deal with and how these are managed on a daily basis. The result is a more nuanced picture, which allows the incident, any contributing factors, learnings and recommendations to be interpreted in the context of daily challenges.

Miranda Cornelissen | Senior Consultant
