Once within the domain of science fiction, fully automated vehicles are now very close to becoming a reality. So, what part will safety assurance and regulation play in ensuring AVs can be used safely on our roads?

In the 12 months prior to December 2022, Australia recorded a total of 1,191 deaths on its roads [1].

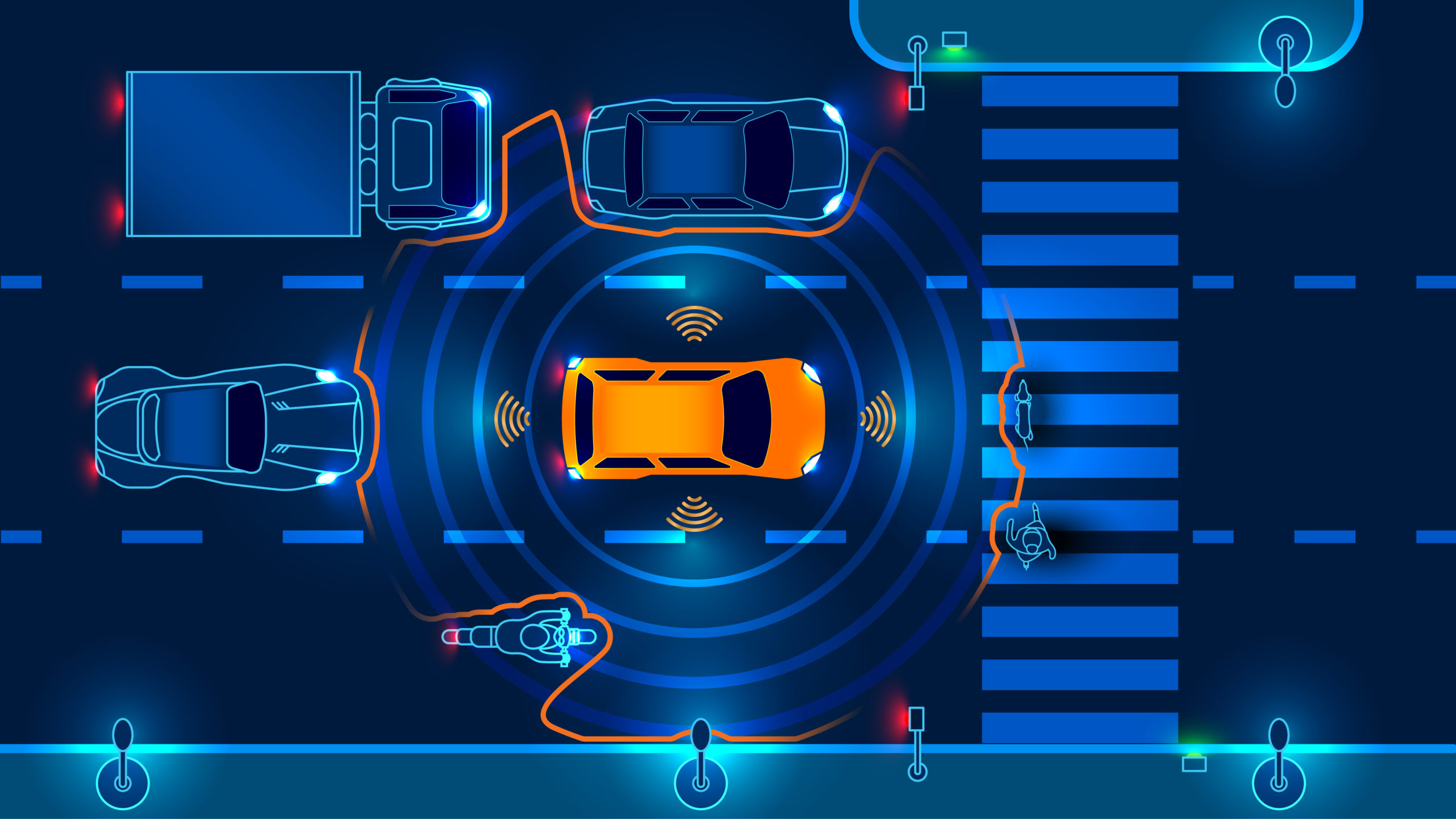

With statistics showing human fallibility consistently contributing to over 90% of all road traffic accidents [2,3], it would be logical to argue that the development and introduction of automated vehicles (AVs) provides an opportunity for safer roads.

However, public concern regarding the safety of AVs is a significant obstacle to the successful introduction of this disruptive technology, which is driven by artificial intelligence (AI) [4].

Therefore, to fully realise the benefits of automated vehicles, it is critical to understand what role regulation can play in ensuring the new technology is safe and trustworthy, and whether proposed regulation goes far enough.

The Car Driving Paradigm Shift

Most people are aware of the common causes of road traffic accidents [5], such as violation of road rules, driver impairment, driver distraction, fatigue and general skill and knowledge-based errors.

These factors can be eliminated by removing the driver from their position of control and replacing them with an automated driving system (ADS), as has been done in other regulated transportation sectors such as rail and aviation.

However, even if we remove the driver from the primary control of the vehicle, humans still play a significant part in vehicle operation. Upstream in the design process, factors such as software and system design, defined responses in specific scenarios, infrastructure suitability and artificial intelligence deployment will still require development by engineers.

Systematic errors in the design or issues with how a vehicle is operated and maintained may lead to safety incidents throughout the lifecycle of the vehicle. Many of these potential issues are unknown and may only present themselves through unexpected and undesired events.

For the past 100 years, since horse-drawn vehicles became generally redundant with the introduction of the internal combustion engine, little has changed in the way that motor vehicles have been regulated and operated, or indeed the types of hazards which exist.

As a result, cultural norms concerning driver competence, liability, insurance, moral conscience and the ethical landscape have become ingrained in society, with regulation aimed primarily at the person driving the vehicle through licensing, testing and punishment for violating road rules.

Public Safety Concerns

Vehicles with automated functions are already on our roads [6]. Examples include vehicles with driver assistance, adaptive cruise control, automatic lane keeping or parking assistance, with the law presently based on the idea that the driver is still in control when using a vehicle in one of these automated modes.

Until all vehicles used on the roads are automated, with initial technology errors and issues resolved and the infrastructure in place to support the technology, there will be an indefinite period where a mix of manually operated and automated vehicles will co-exist, which may cause uncertainty and safety risks.

To attempt to allay public safety concerns, five main areas need to be addressed [7] to ensure that road vehicle safety is maintained and hopefully improved, through the change from manual to automated driving. These five areas are:

- Safety assurance of design

- Liability and responsibility for safe operation

- Human factors and cognitive response

- Security and privacy

- Ethics.

The following examples highlight some of the challenges facing the industry:

Safety Assurance of Design: Existing safety assurance and integrity methods may help identify the known hazards and uncover some yet unknown issues.

However, given the speed of technological advances and the effectively infinite number of variables to compute for any vehicle scenario, there will be unidentified issues that have not been considered in design or performance.

Therefore, traditional safety assurance processes might not be adequate. Understanding failure modes, computer vulnerability, reliability of systems, configuration management, verification and validation of design and safety requirements management are all key to being able to mitigate the risks. But do they go far enough?

Liability and Responsibility Landscape: Traditionally, if a driver violates the road rules, they are liable. However, consider the scenario where a new school is built with new speed limits introduced around school hours, reducing the limit from 60 km/h to 40 km/h. A pedestrian is hit and fatally injured by a car travelling at 60 km/h in automated mode. Upon investigation, it is found that the vehicle maps had not been updated to capture the new speed limit.

So, where does liability for the incident sit? Within this scenario, there are many variables. Automatic software updating would require network or Wi-Fi connectivity.

The new speed limit domain needs to be added to the GPS mapping. The operator of the vehicle may not be the owner, and may not be aware of a map or software update. Updates may also be managed by a fleet or leasing company under alternative ownership arrangements.

Therefore, the management of driver aids such as Sat-Nav and GPS connectivity to the automated driving system can now become a safety-related function. With multiple stakeholders involved, the question becomes: who is responsible for maintaining the effectiveness of that safety function?
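One way to picture map currency as a safety-related function is as a pre-condition that is checked before automated mode is offered. The following is a minimal sketch only: the `MapStatus` record, the `responsible_party` field and the seven-day threshold are hypothetical and are not drawn from any regulation or manufacturer's system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumed threshold for illustration only; a real limit would have to be
# justified through the safety case and agreed with the regulator.
MAX_MAP_AGE = timedelta(days=7)


@dataclass(frozen=True)
class MapStatus:
    """Hypothetical record of when the map was last updated, and by whom."""
    last_updated: datetime
    responsible_party: str  # e.g. owner, fleet operator or manufacturer


def automated_mode_permitted(status: MapStatus, now: datetime,
                             max_age: timedelta = MAX_MAP_AGE) -> bool:
    """Return True only if the map data is recent enough to rely on."""
    return now - status.last_updated <= max_age


if __name__ == "__main__":
    now = datetime(2023, 1, 10, tzinfo=timezone.utc)
    status = MapStatus(datetime(2022, 12, 1, tzinfo=timezone.utc),
                       responsible_party="leasing company")
    if not automated_mode_permitted(status, now):
        print(f"Automated mode withheld: map is stale; "
              f"update owed by {status.responsible_party}")
```

Recording a named responsible party alongside the update date makes the open question explicit: the check is simple to write, but someone has to be accountable for keeping it satisfied.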

Human Factors: Consider the scenario of a person travelling in an AV while in automated mode. Due to a system failure, or a scenario that the computer cannot resolve, the operator may be required to take back control.

However, from research undertaken in the aviation sector, it can take some time for the operator to establish a full cognitive understanding of the situation. During this time the vehicle has travelled some distance down the road in uncertain circumstances.
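To give a sense of scale, the distance covered while the operator re-engages can be estimated as speed multiplied by time. The sketch below assumes constant speed, and the takeover durations are illustrative assumptions rather than figures from the aviation research mentioned above.

```python
def distance_during_takeover(speed_kmh: float, takeover_seconds: float) -> float:
    """Metres travelled at constant speed while the operator regains control."""
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_ms * takeover_seconds


if __name__ == "__main__":
    # Illustrative speeds and takeover times only (assumptions).
    for speed_kmh in (40, 60, 100):
        for takeover_s in (2.0, 5.0, 10.0):
            metres = distance_during_takeover(speed_kmh, takeover_s)
            print(f"{speed_kmh:>3} km/h, {takeover_s:>4.1f} s -> {metres:6.1f} m")
```

At 60 km/h, for example, a five-second takeover corresponds to roughly 83 m of travel before the operator is fully in control.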

Further to this, different brands of vehicles may have different protocols or methods to alert the driver or different actions required by the driver. In conventional vehicles, the driver is legally in control of the vehicle, with competence assessed through testing or experience.

So how can the competence of the human to do the right thing be assured, given the different skills required when riding in an AV?

Security and Privacy: Vehicle computer systems, like any computer network, have vulnerabilities and fallibilities. Traditionally, without the need for connectivity, cyber security hasn’t been a significant issue for the automotive industry, but as the connectivity of vehicles increases so too does the threat.

As with other areas of technological development, people are becoming increasingly concerned over what data is held by organisations and the ability for such data to be used for criminal or unethical intent.

For automated vehicles to be fully connected, data on movements and locations will be utilised; where people have been, and when they went there, will therefore be recorded. There is also the real-world threat of hacking, with AVs potentially being immobilised and held to ransom, because their computer systems will need to be connected to a network.

Ethics: The ethics of motor vehicle transport has not previously been a significant issue, because most incidents require reactive actions by the driver, based upon their moral conscience. There has been considerable research [8] on artificial intelligence and the relationship between human morals and pre-determination of an outcome which may affect the life of a human. For example, if an automated vehicle is about to crash into another vehicle, the AV can choose to veer off its trajectory and avoid the collision.

However, in circumstances where avoiding the collision will result in the vehicle hitting a pedestrian, for example, the AV will be faced with a choice between saving the occupants (and endangering the pedestrian) and continuing on its current course where the vehicles will collide, possibly resulting in multiple fatalities.

Deciding what an AV does in these circumstances becomes a pre-meditated and deliberate act. Whether through software programming or using Artificial Intelligence, computers and system designers will ultimately be making decisions about human life, instead of a spur-of-the-moment reaction from a human driver based upon what they think is the right thing to do.

An intrinsic factor of motor vehicle development has been to protect the vehicle occupants through airbags, seatbelts, impact protection, and crumple zones, with some minor development to protect other vulnerable road users. Would this direction continue in AI applications, meaning cars could be marketed to protect the driver over other road users?

In addressing each of these aspects, a change to existing protocols, policies and processes will need to be considered. Regulation and the regulatory landscape are required to provide a definitive guideline of the legal requirements of each of these areas. However, regulation is a work in progress concerning AV development and through-life operation in Australia.

Proposed Regulation

The Australian National Transport Commission has published several consultation documents and reports over the past few years through its Automated Vehicle Program [9], including its regulatory framework for Automated Vehicles in Australia [10]. As the technology has progressed, government bodies realise that action will be needed as the paradigm of motor vehicle operation shifts.

Other nations, such as the UK, are taking a similar approach [11], where specific agencies and regulators will be created to define new laws and shape the regulatory framework to ensure that automated vehicles placed on the market are safe to be there. The common approach is to use a type approval scheme, as in other transportation sectors such as rail and aviation.

In Australia, it is proposed that three new regulators will be created to oversee the automated vehicle industry. These will include a regulator for “first supply” of vehicles onto the market, an in-service regulator, and state and territory regulators, who will define laws locally. The scope of the regulators will be:

First supply will concentrate on the type approval of new vehicles entering the market, putting the onus on the Automated Driving System Entity (ADSE), which will typically be the vehicle manufacturer, to consider areas such as:

- Safe system design and validation

- Operational Design Domain (ODD) of the vehicle, i.e., the parameters and constraints in which it is designed to operate

- The vehicle Human-Machine Interface (HMI)

- The ability to comply with road traffic laws

- How vehicles will interact with emergency services vehicles

- The minimal risk condition of the vehicle, defining how the vehicle can be brought to a safe stop when in automated mode

- On-road behavioural competency

- Installation of system upgrades

- Verification of the vehicle for the Australian road environment

- Cyber security

- Education and training.
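Two of the items above, the Operational Design Domain and the minimal risk condition, lend themselves to a small illustration. The sketch below is an assumption-laden example rather than a description of any real system: the class, its fields and the chosen limits are hypothetical, and a real ODD would cover many more parameters.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Action(Enum):
    CONTINUE = "continue automated driving"
    MINIMAL_RISK = "bring the vehicle to a minimal risk condition"


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical ODD: the conditions the ADS is designed to operate in."""
    max_speed_limit_kmh: float
    road_types: FrozenSet[str]
    weather: FrozenSet[str]

    def contains(self, speed_limit_kmh: float, road_type: str, weather: str) -> bool:
        """True if the current conditions fall inside the declared ODD."""
        return (speed_limit_kmh <= self.max_speed_limit_kmh
                and road_type in self.road_types
                and weather in self.weather)


def decide(odd: OperationalDesignDomain, speed_limit_kmh: float,
           road_type: str, weather: str) -> Action:
    """Stay in automated mode only while conditions are inside the ODD."""
    if odd.contains(speed_limit_kmh, road_type, weather):
        return Action.CONTINUE
    return Action.MINIMAL_RISK


if __name__ == "__main__":
    # Illustrative limits only (assumptions).
    odd = OperationalDesignDomain(
        max_speed_limit_kmh=80.0,
        road_types=frozenset({"urban", "motorway"}),
        weather=frozenset({"clear", "rain"}),
    )
    print(decide(odd, 60, "urban", "clear").value)
    print(decide(odd, 60, "urban", "snow").value)
```

Declaring the domain as explicit data is what allows a type approval process to test the boundary: the regulator can ask what the vehicle does at, and just beyond, each stated limit.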

Once the vehicle is in service, the relevant regulator will require the ADSE to:

- Maintain general safety duty through the lifecycle of the vehicle, or more specifically the automated driving system

- Identify and mitigate risks that emerge through operation

- Ensure system upgrades happen and that they do not result in new safety risks

- Provide education and training to users

- Maintain records and ensure accountability to demonstrate compliance.

State and territory regulators will be responsible for the local and human elements, including:

- Vehicle registrations

- Road management

- The human users of automated vehicles, including driver regulations and licensing, and the competence of users in taking back control of a vehicle.

While the proposed regulations cover many elements of public concerns, the same cannot be said for the ethical considerations of AVs. Ethics in Artificial Intelligence is considered by the Australian Government [12] but not necessarily in a prescriptive manner when it comes to the deployment of AI in AVs.

Unlike learning to drive oneself, the technology that underpins AI is not something most drivers would be familiar with. As a result, many people do not understand how the technology behind AI works in an automated driving system [14], with it still being viewed as a relatively recent addition to our day-to-day lives.

Given that the driver is presently the focus of most regulation [13], it would seem reasonable that the AI which replaces the driver should be subject to a similar or higher level of scrutiny, assessment and accountability.

A voluntary set of principles does exist as a cross-industry framework, outlining things that an AI system should consider, but not how an AI system shall behave to be compliant under AV regulation.

Conclusion

Proposed AV regulations in Australia may have identified and considered many of the topics of concern to the public. For safety of the design, demarcation of responsibility and liability, cyber security and the human interface aspects, there are proposed regulatory elements that one would expect, which may go some way to allaying the concerns of the public in the adoption of this new technology.

Fundamental to the proposed regulatory approach, design safety shall rely upon robust safety assurance and systems engineering techniques. The Human-Machine Interface, training and education will require the skilled application of human factors methodologies, not just in ergonomics but in the psychology involved in humans interacting safely with machines. These elements have been present in the development of automation in other transportation sectors, such as aviation, rail and space.

However, with AI effectively replacing the person as the driver, perhaps the ethics and fundamentals of how it operates are an area which requires more consideration. As it is presently the driver who is subject to the road rules in how they operate a vehicle, it could be argued that the AI should be subject to the same level of accountability, with liability and responsibility placed on those who are developing it and with sufficient ethical scrutiny applied to how AI performs in AVs.

Campbell Sims | Senior Consultant


References

- Bureau of Infrastructure and Transport Research Economics (BITRE), (2022), “Road Deaths Australia”, Available at: https://www.bitre.gov.au/publications/ongoing/road_deaths_australia_monthly_bulletins

- UK Government, (2022), Reported Road accidents, vehicles and casualties tables for Great Britain. Available at: Reported road accidents, vehicles and casualties tables for Great Britain – GOV.UK (www.gov.uk)

- Treat, et al (1977), “Tri-level Study of the Causes of Traffic Accidents”, Washington, US Department of Transportation

- Tennant, C., Stares, S. and Howard, S. (2019), “Public discomfort at the prospect of autonomous vehicles: Building on previous surveys to measure attitudes in 11 countries”, Transportation Research Part F: Traffic Psychology and Behaviour, 64, pp. 98-118.

- Budget Direct, (2021), “Car accidents survey & statistics 2021”. Available at: https://www.budgetdirect.com.au/car-insurance/research/car-accident-statistics.html

- Society of Automotive Engineers, (2021), SAE Levels of Driving Automation https://www.sae.org/blog/sae-j3016-update

- Sims, C., (2022), “Regulatory Approach to Safety Systems using Artificial Intelligence in Automated Vehicles”, Australian System Safety Conference 2022. Available at: https://www.ascsa.org.au/_files/ugd/00ef47_6f9cb01295d141e6b336b248d0f8c54d.pdf

- Cunneen, M., Mullins, M., Murphy, F., Gaines, S., (2019), “Artificial Driving Intelligence and Moral Agency: Examining the Decision Ontology of Unavoidable Road Traffic Accidents through the Prism of the Trolley Dilemma”, Applied Artificial Intelligence, 33:3, 267-293

- National Transport Commission, (2020), “Automated Vehicle Program Approach”. Available at: https://www.ntc.gov.au/sites/default/files/assets/files/Automated%20vehicle%20approach.pdf

- National Transport Commission, (2022), “Regulatory Framework for Automated Vehicles in Australia”. Available at: https://www.ntc.gov.au/sites/default/files/assets/files/NTC%20Policy%20Paper%20-%20regulatory%20framework%20for%20automated%20vehicles%20in%20Australia.pdf

- UK Law Commission, (2022), “Automated Vehicles Joint Report”. Available at: https://www.lawcom.gov.uk/document/automated-vehicles-final-report/

- Australian Government, Department of Industry, Science & Resources, (2022), “Australia’s Artificial Intelligence Ethics Framework”. Available at: https://www.industry.gov.au/data-and-publications/australias-artificial-intelligence-ethics-framework/australias-ai-ethics-principles

- Parliamentary Counsel’s Committee, (2021), “Australian Road Rules”. Available at: https://pcc.gov.au/uniform/Australian-Road-Rules-10December2021.pdf

- Garcia, K., et al, (2022), “Drivers’ Understanding of Artificial Intelligence in Automated Driving Systems”. Journal of Cognitive Engineering and Decision Making, Volume Online First: 1 – Jan 1, 2022, PP.1-15.